The Car is the Computer: Nissan draws upon Silicon Valley

0 minutes to Innovation

Nissan Advanced Technology Center – Silicon Valley (NATC-SV) is Nissan's Center of Excellence for Artificial Intelligence and Next-generation Mobility solutions in Silicon Valley. It is where our researchers and scientists focus on Materials Science (for superior battery technology), Robotics Science (for Autonomous Drive), Mathematical Sciences (for AI/Machine Learning, Large Language Models or LLMs, Large Multimodal Models or LMMs, and new AI solutions), Computer Science & Engineering (for custom silicon that meets the growing demand for computational power in the vehicle), and Data and Social Sciences (for safety and quality of the driving experience and, in the case of electric cars, advances in infrastructure). All this effort is aimed at improving mobility today and inventing real solutions for tomorrow.

Creating our Center in Silicon Valley was no accident. Being here means we can draw from, collaborate on, and contribute to innovations happening in the heart of the San Francisco Bay Area. We benefit from the proximity to world-renowned universities. Stanford University is just around the corner and UC Berkeley is a mere hop to the other side of the bay. We get access to professors, researchers, scientists, and engineers creating technologies that drive our world (and cars too).

As we embarked on architecting and developing our next generation of autonomous driving solutions, we cast a wide net, looking for a big leap in compute capabilities rather than an incremental step. We approach automotive software and custom silicon development as a co-design project.

Figure 1: Nissan Advanced Technology Center, Silicon Valley (NATC-SV)

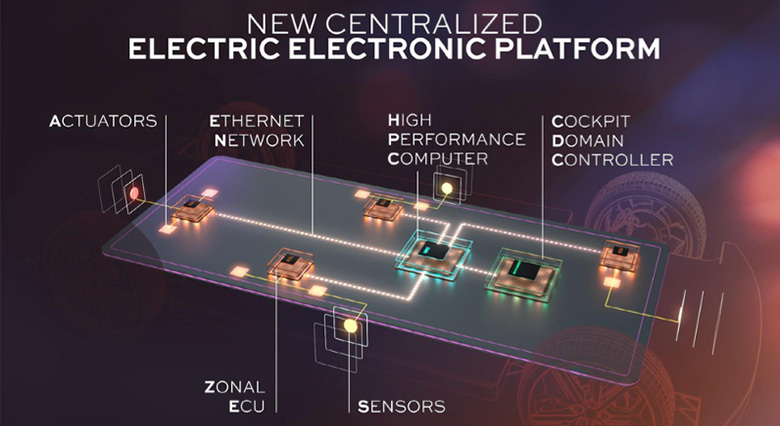

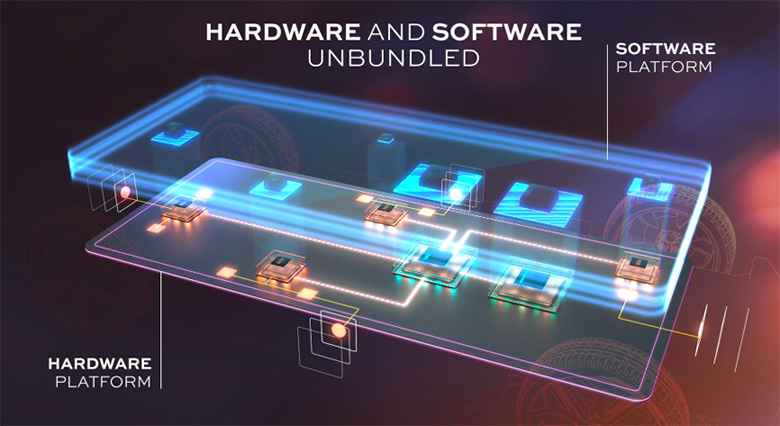

If you live in Silicon Valley, you might have seen some of our cars modified for Autonomous Drive (AD) Level 4 traveling along our streets, roads, freeways and highways. These cars are becoming "supercomputers on wheels" and are forcing a review of the Electrical & Electronics (EE) Architecture in the car. The concept of Software-Defined Vehicles (SDVs) was advanced to meet this new context. An SDV clearly separates the software and hardware domains and makes construction more efficient, which implies lower costs. Efficiency also extends to maintenance of the vehicle, since over-the-air (OTA) capabilities allow car features to be updated remotely. On the hardware level, OTA updates of all the car features are enabled by a centralized computing architecture that oversees everything happening in the car. Data from myriad sensors is collected in the car zones, aggregated, and sent to the central computer over a high-speed, low-latency in-car network. The sensor data is then processed, and decisions come back to the many actuators in the zones over the same network. This high-speed network thus minimizes the wiring in the car, lowering cost and weight. All this also brings new concerns about isolation, security and safety of data and people in SDVs, concerns that are at the cutting edge of research and technology development.
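The data flow described above (zones aggregate sensor data, the central computer decides, decisions return to actuators in the zones) can be sketched in a few lines. This is a toy illustration only, not Nissan's architecture or software: the `Zone` and `CentralComputer` classes, the sensor names, and the braking rule are all hypothetical, and the in-car network is reduced to plain function calls.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Zone:
    """Hypothetical zone controller: aggregates local sensors, drives local actuators."""
    name: str
    sensors: Dict[str, Callable[[], float]]
    actuator_log: List[Tuple[str, float]] = field(default_factory=list)

    def aggregate(self) -> Dict[str, float]:
        # Read every sensor in this zone and bundle the readings into one frame.
        return {sensor: read() for sensor, read in self.sensors.items()}

    def actuate(self, command: str, value: float) -> None:
        # Stand-in for driving a real actuator: just record the command.
        self.actuator_log.append((command, value))


class CentralComputer:
    """Hypothetical central computer: receives zone frames, decides, sends commands back."""

    def __init__(self, zones: List[Zone]):
        self.zones = {zone.name: zone for zone in zones}

    def step(self, min_gap_m: float = 10.0) -> Dict[str, Dict[str, float]]:
        # 1. Uplink: collect one aggregated frame per zone.
        frames = {name: zone.aggregate() for name, zone in self.zones.items()}
        # 2. Decide centrally. Toy rule: brake if any measured distance is below min_gap_m.
        distances = [
            value
            for frame in frames.values()
            for key, value in frame.items()
            if key.endswith("distance_m")
        ]
        brake = 1.0 if distances and min(distances) < min_gap_m else 0.0
        # 3. Downlink: send the decision to the actuators in every zone.
        for zone in self.zones.values():
            zone.actuate("brake", brake)
        return frames
```

The point of the sketch is the shape of the loop: zones never talk to each other directly, so every feature lives in one place (the central computer) and can be updated in one place.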

The late Google VP and Fellow Luiz Andre Barroso's "The Datacenter as a Computer" promoted the view that the datacenter should be thought of as one massive computer instead of a collection of individual servers. In the same spirit, we at Nissan promote the thought that "The Car is the Computer". The effect is even more spectacular, since SDVs materialize the convergence of three industries: (1) the robotics industry (the autonomous driving car is a robot on four wheels), (2) the AI industry (the car will see the world, understand the world, and make decisions faster and better than any human driver), and (3) the high-performance computing industry (the computer and network industries together).

Figure 2: Software-Defined Vehicles (SDVs): centralized Electrical & Electronics (EE) platform and its separation of hardware and software domains. Panels: hardware underpinnings of SDVs; hardware and software planes of SDVs. Source: Alliance Renault Nissan.

SDVs are establishing the view that future cars will be "supercomputers on wheels".

7 minutes to Cloud Native and AI Compute

While considering our in-car computational needs, we pushed to compose our custom chipletized System-on-Chip (SoC) from advanced datacenter-class Arm CPU cores, GPU cores, and domain-specific accelerators designed by us at NATC-SV. It helped that all the critical algorithms and components of the software we wanted to run fast had already been developed at our NATC-SV facility. To make the effort towards this new custom SoC efficient, we decided to establish architectural commonality among (a) our development platform, (b) our deployment platform for in-vehicle demonstrations and (c) our chipletized custom SoC architecture.

Figure 3: Ampere Computing: a great partner just 7 minutes away from NATC-SV. Left to right: Luiz Franca-Neto (NATC-SV) with Mark Glenski, Joe Speed and Brett Collingwood (all three from Ampere Computing)

We were greatly helped by being in Silicon Valley. As frequently happens in the Valley, we only needed to tap our professional network, former colleagues from Intel, AMD, Arm and several start-ups, to find willing partners to collaborate with us. As it happened, one of those willing partners was just 7 minutes from our NATC-SV center: Ampere Computing.

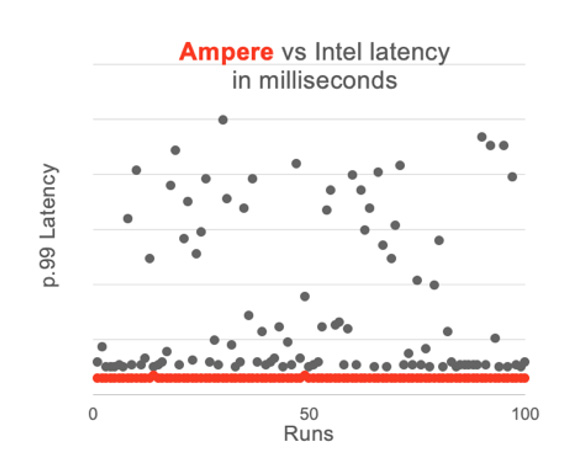

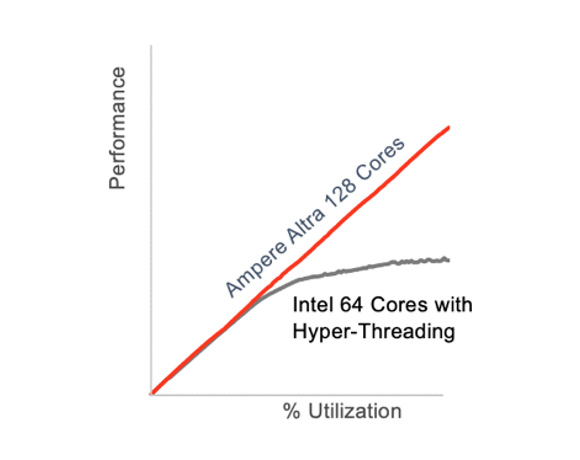

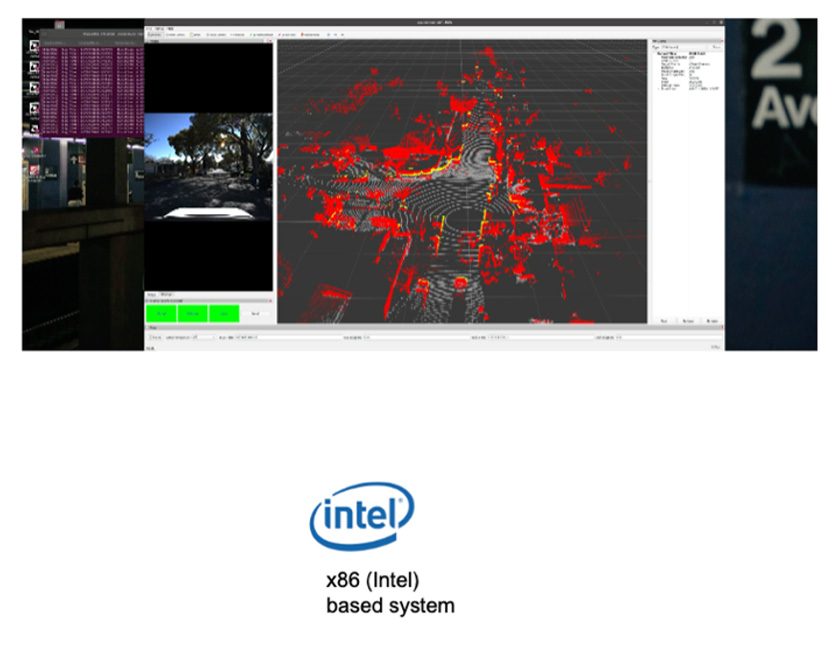

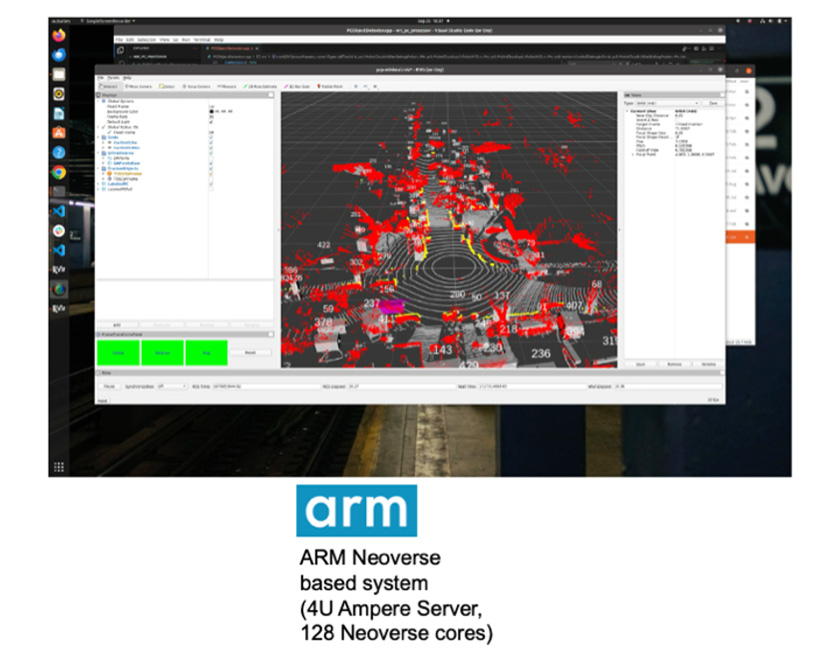

Since all our modified autonomous Level 4 vehicles ran our algorithms on x86 CPUs with NVIDIA GPUs, we wanted to quickly confirm whether advanced Arm CPU cores had a chance. Ampere makes big Arm-based processors. Really big ones, with 128, 192, and more cores. These are datacenter-class Arm-based processors used from edge to cloud. Cloud providers prize Ampere's ability to support large numbers of "tenants" (users) sharing the same processor while enjoying predictably good response times, freedom from interference, and strong security. This came in very handy, since we also expected our custom SoC to deliver datacenter-class performance.

Figure 4: Ampere vs Intel: bringing Arm Neoverse-based CPUs to datacenter-class solutions. Panels: Predictable Low Latency; Consistent Throughput; Linear Scaling.

All this translates well to robotics, including autonomous driving, where many workloads must run simultaneously with predictable latency and without interfering with one another. Sensor fusion, perception pipelines, localization, path planning, and much more can therefore run on Arm Neoverse cores. We brought our AD Level 4 stack to Ampere Arm-based processor systems with NVIDIA GPUs with great success.
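One common way to keep simultaneous workloads from interfering on a many-core processor is to give each workload its own disjoint set of cores. The sketch below illustrates that general technique only; it is not a description of how the NATC-SV stack is scheduled. The workload names come from the text, but the core counts, `partition_cores` and `pin_current_process` are hypothetical. `os.sched_setaffinity` is a real Python standard-library call, available on Linux.

```python
import os
from typing import Dict, Set


def partition_cores(total_cores: int, core_counts: Dict[str, int]) -> Dict[str, Set[int]]:
    """Assign each workload a disjoint, contiguous set of core IDs."""
    if any(count < 1 for count in core_counts.values()):
        raise ValueError("every workload needs at least one core")
    if sum(core_counts.values()) > total_cores:
        raise ValueError("requested more cores than the processor has")
    plan: Dict[str, Set[int]] = {}
    start = 0
    for name, count in core_counts.items():
        plan[name] = set(range(start, start + count))
        start += count
    return plan


def pin_current_process(cores: Set[int]) -> bool:
    """Pin the calling process to `cores` where the OS supports it (Linux)."""
    if hasattr(os, "sched_setaffinity"):
        os.sched_setaffinity(0, cores)  # pid 0 means "the calling process"
        return True
    return False


# Hypothetical split of a 128-core processor among the workloads named in the text.
plan = partition_cores(
    128,
    {"sensor_fusion": 32, "perception": 48, "localization": 16, "path_planning": 32},
)
```

Each workload process would then call `pin_current_process(plan["perception"])` (or its own entry) at startup, so that a burst in one pipeline cannot steal CPU time from another.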

Figure 5: Autonomous Drive Level 4 moving from Intel-based systems with NVIDIA GPUs to Arm-based systems with NVIDIA GPUs

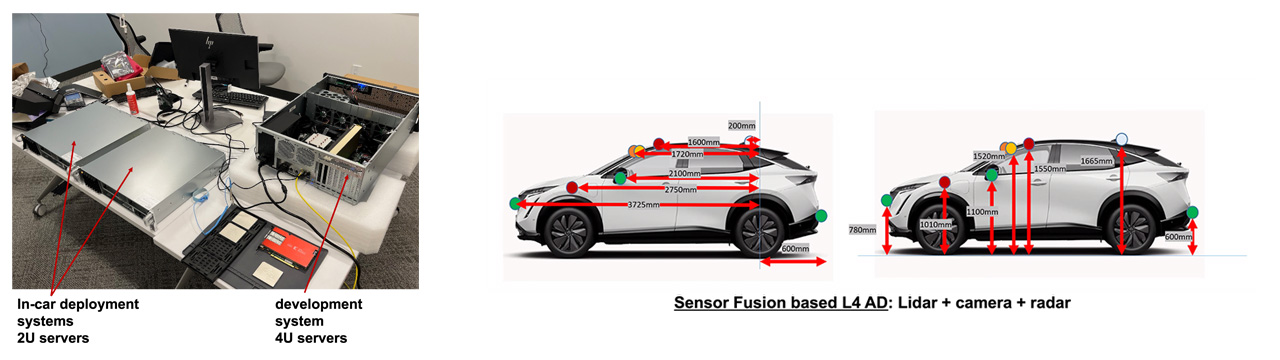

After the successful transfer of our AD Level 4 stack to Arm-based systems, the path was clear for us to further develop our facilities for improved AD solutions with sensor fusion. We purchased several workstations and servers based on Ampere processors.

Figure 6: Ampere Arm-based systems with NVIDIA GPUs and FPGAs (to emulate our future silicon specialized accelerators) in demonstrations of our AD Level 4 algorithms and software

17 minutes to Fast Start

The pace picked up. It turns out that Ampere does not just have technology. They have friends too. ASRock Rack US in Fremont, 17 minutes away, custom-built our first Ampere server with FPGAs and NVIDIA GPUs. It was a 4U server, large enough to become our development workhorse, with easy access for maintenance and for adding all the PCIe accelerator cards. We jokingly named this first server "Tiny"; it makes a cameo appearance in Figure 6.

13 minutes to power Autonomous Vehicles

We love our ASRock Rack Ampere AI servers, but they are too large to go into our autonomous driving R&D fleet vehicles. Meanwhile, 13 minutes away, Roger Chen and his team at Supermicro had designed a very compact, durable and powerful Ampere telco Edge AI server for 5G base stations and other challenging environments. With Supermicro's help, we were able to ruggedize these servers and equip our R&D fleet with them. Despite the compact dimensions, we are able to load them up, adding a second accelerator, a high-end FPGA, alongside the NVIDIA GPUs that Supermicro delivered in those servers.

2 hours 25 minutes to AI Arm Developer Desktops

To enable faster delivery of better software and algorithms, we thought it important to equip our autonomous driving developers with AI developer desktops using the same 128-core Ampere processors we're designing into our R&D vehicles. So we commissioned System76 in Denver, Colorado, 2 hours 25 minutes away by plane. While not physically just down the street, the Denver "Silicon Mountain" hotspot of innovation is very much a kindred spirit of Silicon Valley. The people at System76 are brilliant engineers and meticulous craftsmen, so having them design, manufacture and support our AI developer desktops just a short flight and one time zone away has been immensely helpful for us. Ampere visited their factory to inspect Nissan's "Thelio Astra" AI developer desktops during product development and gave us wonderful feedback.

Figure 7: System76 Thelio Astra, autonomous racing maven Will Bryan's reaction, and Ampere car guy Joe Speed and chief evangelist Sean Varley at an autonomous driving meetup hosted by Arm Silicon Valley, 12 minutes from NATC-SV.

Now and towards Custom Silicon

As far as development infrastructure goes, we're not done. We're working with the 192-core AmpereOne processors. Our colleagues from Robotics Science and Mathematical Sciences at NATC-SV continue to improve our Autonomous Drive (AD) Level 4 algorithms. We are bringing AI to everything in the car. AI will improve autonomy as well as the interactions and experience of the people in the car. In doing so, we identified the need for specialized hardware acceleration in several of our algorithms. From there, we started our own development of custom silicon, compilers and runtime.

For historical reasons, the software tools needed for custom silicon development run on x86 machines, and not all of them run on Arm-based machines yet (but they will). We adapted. Our custom silicon flow uses cloud services from EDA companies running on x86 machines, together with Arm-based machines in our Center.

Our journey is just starting. Beyond autonomy and AI, much of the legacy ECU software will be moved to the central computer in our SDV architecture. Automotive ECUs (vehicle computers) are also Arm-based, and we will add the necessary additional cores in another chiplet in our SoC. With the use of virtualization, we hope to achieve much better utilization of this central computer for ECU tasks. This will further justify SDV as an efficient path to solutions with fewer Arm cores, lower cost and lower power.
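A back-of-the-envelope sketch shows why consolidation can reduce core count. Assume, purely for illustration, that each legacy ECU task keeps its own dedicated core only lightly loaded. Packing those tasks onto shared cores (here with the classic first-fit-decreasing heuristic, not any scheduler Nissan has described) needs far fewer cores. The task names, load figures, the 80% utilization cap, and the `consolidate` function are all hypothetical.

```python
from typing import Dict, List


def consolidate(loads: Dict[str, float], capacity: float = 0.8) -> List[Dict[str, float]]:
    """First-fit decreasing: place each task on the first shared core with room left.

    `loads` maps task name to the fraction of one core it needs;
    `capacity` caps the total load allowed on any one core.
    """
    cores: List[Dict[str, float]] = []
    # Largest tasks first, so small tasks can fill the remaining gaps.
    for name, load in sorted(loads.items(), key=lambda item: item[1], reverse=True):
        if load > capacity:
            raise ValueError(f"task {name!r} does not fit on a single core")
        for core in cores:
            # Small tolerance guards against floating-point rounding.
            if sum(core.values()) + load <= capacity + 1e-9:
                core[name] = load
                break
        else:
            cores.append({name: load})
    return cores


# Hypothetical per-ECU loads, each as a fraction of one core.
ecu_loads = {
    "body_control": 0.10,
    "door_modules": 0.05,
    "climate": 0.15,
    "lighting": 0.05,
    "seat_control": 0.05,
    "infotainment_bridge": 0.30,
    "battery_monitor": 0.25,
    "wipers": 0.05,
}
shared_cores = consolidate(ecu_loads)
```

With these made-up numbers, eight tasks that would otherwise occupy eight separate ECU cores fit on two shared cores. Real consolidation also has to respect isolation and timing constraints, which this sketch ignores.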

At this point, a line from Linus Torvalds comes to mind: "Cross-development is pointless and stupid when the alternative is to just develop and deploy on the same platform." x86 won the data center because Intel PCs were what people developed on. We are unifying everything around the Arm architecture. We strive to have AD and AI software development, and deployment into modified vehicles, all on Arm-based systems. Next comes custom silicon development on Arm workstations and servers. Finally, we plan to have Arm-based custom silicon with specialized accelerators developed at NATC-SV, where we also develop compilers and runtime. We expect that such a common Arm-based solution will make development, verification, validation and deployment more efficient and less error-prone.

As mentioned earlier, the future will bring us vehicles that look a lot like supercomputers on wheels. SDVs will make clear that "The Car is the Computer". And it will be a superb Arm-based computer.

__

Luiz M Franca-Neto, Ph.D.

Domain Head for Computer Architecture, Compilers & Runtime research

Nissan Advanced Technology Center, Silicon Valley (NATC-SV)